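In yesterday's [Day - 13], we took a deeper look at Prompt Engineering. Today we will use the API introduced in [Day - 8] to do the actual integration. For the chat implementation that follows, I will use GPT-4 as the main model.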

First, following [Day - 8], we create the corresponding GPT API models so that we can use them directly later on:

    export type ChatRole = 'system' | 'user' | 'assistant';

    export interface ChatMessageModel {
      role?: ChatRole;
      name?: string;
      content: string;
    }

    export interface ChatRequestModel {
      model: string;
      messages: ChatMessageModel[];
      temperature?: number;
      top_p?: number;
      stream?: boolean;
      max_tokens?: number;
    }

    export interface ChatResponseModel {
      id: string;
      object: string;
      created: number;
      model: string;
      choices: ChatChoicesModel[];
      usage: ChatUsageModel;
    }

    export interface ChatChoicesModel {
      index: number;
      message: ChatMessageModel;
      finish_reason: string;
    }

    export interface ChatUsageModel {
      prompt_tokens: number;
      completion_tokens: number;
      total_tokens: number;
    }
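As a quick illustration of how these models fit together, the sketch below builds a request body and reads the first reply out of a response. It repeats trimmed-down copies of the interfaces so that it runs on its own. `buildChatRequest()`, `firstReply()` and the sample response object are hypothetical names and made-up data for this example, not part of the project.

```typescript
type ChatRole = 'system' | 'user' | 'assistant';

interface ChatMessageModel {
  role?: ChatRole;
  name?: string;
  content: string;
}

interface ChatRequestModel {
  model: string;
  messages: ChatMessageModel[];
  temperature?: number;
  top_p?: number;
}

interface ChatChoicesModel {
  index: number;
  message: ChatMessageModel;
  finish_reason: string;
}

interface ChatResponseModel {
  id: string;
  object: string;
  created: number;
  model: string;
  choices: ChatChoicesModel[];
  usage: { prompt_tokens: number; completion_tokens: number; total_tokens: number };
}

// Hypothetical helper: wrap a message list into a request body.
function buildChatRequest(messages: ChatMessageModel[]): ChatRequestModel {
  return { model: 'gpt-4', messages, temperature: 0.7, top_p: 1 };
}

// Hypothetical helper: text of the first choice, or '' when there is none.
function firstReply(response: ChatResponseModel): string {
  return response.choices[0]?.message.content ?? '';
}

const request = buildChatRequest([
  { role: 'system', content: 'You are an English speaking tutor.' },
  { role: 'user', content: 'Hi there! How are you?' },
]);

// Made-up response, shaped like ChatResponseModel (all values are illustrative).
const sampleResponse: ChatResponseModel = {
  id: 'chatcmpl-example',
  object: 'chat.completion',
  created: 0,
  model: 'gpt-4',
  choices: [
    {
      index: 0,
      message: { role: 'assistant', content: 'I am fine, thank you!' },
      finish_reason: 'stop',
    },
  ],
  usage: { prompt_tokens: 20, completion_tokens: 7, total_tokens: 27 },
};

console.log(JSON.stringify(request));
console.log(firstReply(sampleResponse));
```

Reading the reply through a small helper like this keeps the `choices[0]` access in one place, which is convenient if a response ever comes back without any choices.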

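Next, we create an OpenAI Service and inject HttpClient in openai.service.ts to call the API. We also keep an array that stores the whole conversation history, with the "System Prompt" as its first entry. When calling the OpenAI API, the text transcribed from the recording is appended to the history and the whole history is sent to the API. The reply from the GPT-4 model is then added to the history array, so that later turns stay in context: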

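    // Build the HTTP header
    private headers = new HttpHeaders({
      'Authorization': 'Bearer {your token}'
    });

    // Conversation history, starting with the system prompt
    private chatMessages: ChatMessageModel[] = [
      {
        role: 'system',
        content: '1. From now on you are an English speaking tutor, and all conversation is in English. ' +
          '2. Your job is to practice one-on-one everyday conversation with the student; if the student does not know a word or sentence, you may explain it in Chinese. ' +
          '3. The student is at roughly TOEIC 400-600, so ask questions suited to that level. ' +
          '4. During conversation practice, correct grammar mistakes made by the student whenever possible. ' +
          '5. Open new everyday topics when appropriate.'
      },
    ];

    constructor(private http: HttpClient) { }

    public chatAPI(contentData: string) {
      // Add the user message to the history
      this.addChatMessage('user', contentData);
      return this.http.post<ChatResponseModel>(
        'https://api.openai.com/v1/chat/completions',
        this.getConversationRequestData(),
        { headers: this.headers }
      ).pipe(
        // Add the GPT reply to the history
        tap(chatAPIResult => this.addChatMessage('assistant', chatAPIResult.choices[0].message.content))
      );
    }

    // Request body: the model settings plus the full history
    private getConversationRequestData(): ChatRequestModel {
      return {
        model: 'gpt-4',
        messages: this.chatMessages,
        temperature: 0.7,
        top_p: 1
      };
    }

    private addChatMessage(roleData: ChatRole, contentData: string) {
      this.chatMessages.push({
        role: roleData,
        content: contentData
      });
    }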
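To make the history mechanism easier to see in isolation, here is a minimal sketch without Angular or HTTP. The `Conversation` class, the `Transport` type and `fakeSend` are hypothetical stand-ins invented for this example: the transport is a plain synchronous function that plays the role of the API call, so the only thing shown is how the message list grows from turn to turn.

```typescript
type ChatRole = 'system' | 'user' | 'assistant';

interface ChatMessage {
  role: ChatRole;
  content: string;
}

// Stand-in for the HTTP call: receives the full message list, returns the reply text.
type Transport = (messages: ChatMessage[]) => string;

class Conversation {
  private messages: ChatMessage[];
  private send: Transport;

  constructor(systemPrompt: string, send: Transport) {
    // The system prompt is always the first entry of the history.
    this.messages = [{ role: 'system', content: systemPrompt }];
    this.send = send;
  }

  chat(userText: string): string {
    // 1. Append the user message.
    this.messages.push({ role: 'user', content: userText });
    // 2. Send the whole history (a copy, so the transport cannot mutate it).
    const reply = this.send([...this.messages]);
    // 3. Append the reply so the next turn has it as context.
    this.messages.push({ role: 'assistant', content: reply });
    return reply;
  }

  get length(): number {
    return this.messages.length;
  }
}

// Fake transport that records how many messages each call received.
const sentCounts: number[] = [];
const fakeSend: Transport = (messages) => {
  sentCounts.push(messages.length);
  return `reply #${sentCounts.length}`;
};

const conversation = new Conversation('You are an English speaking tutor.', fakeSend);
const firstAnswer = conversation.chat('Hi there! How are you?');
const secondAnswer = conversation.chat('What should we talk about?');

console.log(firstAnswer, secondAnswer, sentCounts, conversation.length);
```

The first call sends two messages (system and user) and the second sends four, because the earlier user message and reply travel along with the new one. This is also why long conversations consume more prompt tokens on every turn.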

After injecting the OpenAI Service into home.page.ts of the Home page, we add the chatAPI() call to OnGetRecordingBase64Text():

    // Build the HTTP header
    private headers = new HttpHeaders({
      'Authorization': 'Bearer {your token}'
    });

    constructor(private http: HttpClient,
      private statusService: StatusService,
      private openaiService: OpenaiService) { }

    OnGetRecordingBase64Text(recordingBase64Data: RecordingData) {
      const requestData: AudioConvertRequestModel = {
        aacBase64Data: recordingBase64Data.value.recordDataBase64
      };
      // Show the loading indicator
      this.statusService.startLoading();
      // Audio Convert API
      this.http.post<AudioConvertResponseModel>('{your Web App URL}/AudioConvert/aac2m4a', requestData).pipe(
        switchMap(audioAPIResult => {
          // Convert the Base64 string to a Blob
          const byteCharacters = atob(audioAPIResult.m4aBase64Data);
          const byteNumbers = new Array(byteCharacters.length);
          for (let i = 0; i < byteCharacters.length; i++) {
            byteNumbers[i] = byteCharacters.charCodeAt(i);
          }
          const byteArray = new Uint8Array(byteNumbers);
          const blob = new Blob([byteArray], { type: 'audio/m4a' });
          // Build the FormData
          const formData = new FormData();
          formData.append('file', blob, 'audio.m4a');
          formData.append('model', 'whisper-1');
          formData.append('language', 'en');
          // Whisper API
          return this.http.post<WhisperResponseModel>('https://api.openai.com/v1/audio/transcriptions', formData, { headers: this.headers });
        }),
        // Chat API
        switchMap(whisperAPIResult => this.openaiService.chatAPI(whisperAPIResult.text)),
        finalize(() => {
          // Hide the loading indicator
          this.statusService.stopLoading();
        })
      ).subscribe(result => alert(result.choices[0].message.content));
    }

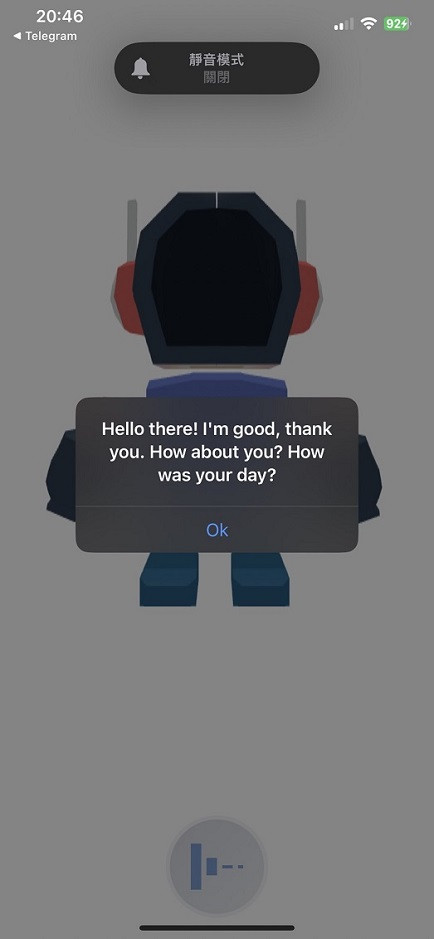

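After finishing and building the code above, we try saying "Hi there! How are you?" to the GPT-4 model on a physical device. Once the API calls complete, the reply that GPT-4 gives to our speech is displayed, which means the conversation with the AI works.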

Finally, we move the Whisper API call out of OnGetRecordingBase64Text() into the OpenAI Service and name it whisperAPI(). That way, everything related to the OpenAI API can be managed and called from one place:

    public whisperAPI(base64Data: string) {
      return this.http.post<WhisperResponseModel>(
        'https://api.openai.com/v1/audio/transcriptions',
        this.getWhisperFormData(base64Data),
        { headers: this.headers }
      );
    }

    private getWhisperFormData(base64Data: string) {
      // Convert the Base64 string to a Blob
      const byteCharacters = atob(base64Data);
      const byteNumbers = new Array(byteCharacters.length);
      for (let i = 0; i < byteCharacters.length; i++) {
        byteNumbers[i] = byteCharacters.charCodeAt(i);
      }
      const byteArray = new Uint8Array(byteNumbers);
      const blob = new Blob([byteArray], { type: 'audio/m4a' });
      // Build the FormData
      const formData = new FormData();
      formData.append('file', blob, 'audio.m4a');
      formData.append('model', 'whisper-1');
      formData.append('language', 'en');
      return formData;
    }
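The Base64-to-Blob step inside getWhisperFormData() can also be pulled out into small standalone helpers, sketched below. `base64ToBytes()` and `bytesToAudioBlob()` are hypothetical names, not functions from the project, and the sketch assumes a runtime where `atob` and `Blob` are available as globals (browsers, or Node.js 18 and later). It writes the decoded bytes straight into a `Uint8Array` instead of going through an intermediate number array.

```typescript
// Decode a Base64 string into raw bytes.
// (Hypothetical helper; the return type is left to inference on purpose.)
function base64ToBytes(base64Data: string) {
  const binary = atob(base64Data); // one character per byte
  const bytes = new Uint8Array(binary.length);
  for (let i = 0; i < binary.length; i++) {
    bytes[i] = binary.charCodeAt(i);
  }
  return bytes;
}

// Decode a Base64 string and wrap it in a Blob with the MIME type used in the article.
function bytesToAudioBlob(base64Data: string): Blob {
  return new Blob([base64ToBytes(base64Data)], { type: 'audio/m4a' });
}

// 'SGk=' is the Base64 encoding of the two ASCII bytes "Hi" (72, 105).
const sampleBytes = base64ToBytes('SGk=');
const sampleBlob = bytesToAudioBlob('SGk=');

console.log(Array.from(sampleBytes), sampleBlob.size, sampleBlob.type);
```

In the service, the resulting Blob would be appended to the FormData exactly as before. Only the decoding is separated out, which makes it easy to check on its own.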

With the Whisper call moved into the service, OnGetRecordingBase64Text() becomes much cleaner:

    OnGetRecordingBase64Text(recordingBase64Data: RecordingData) {
      const requestData: AudioConvertRequestModel = {
        aacBase64Data: recordingBase64Data.value.recordDataBase64
      };
      // Show the loading indicator
      this.statusService.startLoading();
      // Audio Convert API
      this.http.post<AudioConvertResponseModel>('{your Web App URL}/AudioConvert/aac2m4a', requestData).pipe(
        // Whisper API
        switchMap(audioAPIResult => this.openaiService.whisperAPI(audioAPIResult.m4aBase64Data)),
        // Chat API
        switchMap(whisperAPIResult => this.openaiService.chatAPI(whisperAPIResult.text)),
        finalize(() => {
          // Hide the loading indicator
          this.statusService.stopLoading();
        })
      ).subscribe(result => alert(result.choices[0].message.content));
    }

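Today we successfully connected OpenAI's GPT-4 model and used it to implement realistic conversation. We also consolidated everything related to the Whisper API into the Service, which makes the code cleaner and better structured.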

GitHub project code: Ionic結合ChatGPT (Ionic with ChatGPT) - Day14